It's not far away, you know. That promised land of photorealistic gaming. That long-sought-after holy grail of game graphics. But it's not going to be something created by auteur game developers, or even the mad geniuses behind the game engines of the future. No, as with everything in our imminent future, it will be generated by AI.

Wait! Before you click away at the merest mention of an AI-generated gaming future (I know, I know, I'm largely generative AI fatigued, too), this is potentially super exciting when it comes to actually having games that look kinda real.

If your algorithm looks anything like mine (and what a weird turn of phrase that is) then you'll have been seeing AI-generated gaming videos all over TikTok and YouTube, where traditional game capture is fed through a video-to-video generator and out the other end comes some seriously uncanny valley vids.

But they're also pretty spectacular, both in how they look now and in what they could mean for our future. If this sort of AI pathway could be jammed into our games as some sort of final post-processing filter, that's something to be excited about. These examples are obviously not real-time AI filters, and that's going to be the real trick. They're just taking existing video and generating a more realistic version with that as the input reference point.

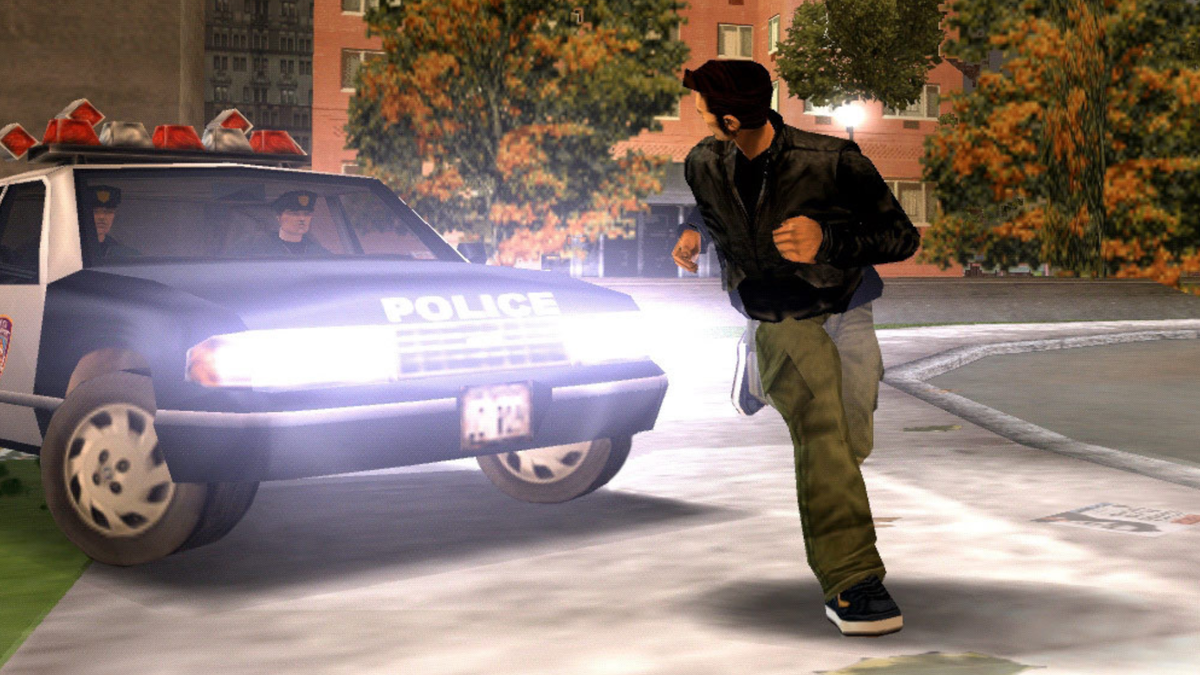

But when you look at what it does with Half-Life, Red Dead, and GTA, the results are pretty outstanding.

What's going on right now is that content creators, such as YouTuber Soundtrick who produced these videos, are taking existing game footage and then running it through the Gen-3 video-to-video tool on Runway ML. It's then generating its own video based entirely off that input video, and people are taking a ton of different games and feeding them into the AI grinder to come out with 'remastered' or 'reimagined with AI' footage of the same games.

And, while they often look janky af, it's not hard to see the potential in this form of generative AI if it's given enough processing power and is somehow able to be done in real-time. Think of what it would look like as a post-processing layer in a game engine, a layer that could interpret a relatively basic input and produce a photorealistic finished product.

It's actually something that's kinda been bothering me as a football-liker and FIFA/FC player—why doesn't it look like the real thing, and how do we ever get there? With AI fed on the ton of televised football matches shown every second of every day around the world, there's more than enough data now to train a single model that could make the game look identical to a real-world football match.

Suddenly standard rendering gets turned on its head and ray tracing gets shown the door. Who needs realistically mapped light rays when you can get some artificial intelligence to make it up? I'll happily go back to faked lighting if it actually looks more realistic.

The actual GPU component of your graphics card will become secondary, needing only to do some rough rendering of low-res polygons with minimal, purely referential textures, just for the purposes of character recognition, motion, and animations. The most important part of your graphics card will then be the memory and matrix processing parts, as well as whatever else is needed to accelerate the probabilistic AI mathematics needed to rapidly generate photorealistic frames once every 8.33 ms.
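For a sense of how tight that window is: 8.33 ms is simply the per-frame budget at 120 frames per second. Here's a minimal Python sketch of the arithmetic. The function names and the 2 ms figure for the rough polygon pass are my own made-up assumptions for illustration, not measurements of any real hardware or model.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to produce a single frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def ai_budget_ms(fps: float, rough_render_ms: float) -> float:
    """Time left for the generative pass after the rough render.

    rough_render_ms is a hypothetical cost for the low-res reference
    render; a negative result means the target frame rate is missed.
    """
    return frame_budget_ms(fps) - rough_render_ms


if __name__ == "__main__":
    for fps in (30, 60, 120):
        total = frame_budget_ms(fps)
        # Assumed, purely illustrative 2 ms for the rough polygon pass.
        left = ai_budget_ms(fps, rough_render_ms=2.0)
        print(f"{fps:>3} fps: {total:.2f} ms per frame, {left:.2f} ms left for the AI pass")
```

Whatever the rough render really costs, everything else (the model, the memory traffic, the input handling) has to fit in what's left, every single frame.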

Half Life with ultra-realistic graphics Gen-3 video to video Runway ML Artificial intelligence - YouTube

Okay, none of that is easy, or even definitively achievable when it comes to working with low-latency user inputs in a gameworld versus pre-baked video, but if that becomes our gaming reality it's going to be very different to the one we inhabit now.

For one, your gaming experience is going to look very different to mine.

At the moment, while the rendering is done in real time, our games are working with pre-baked models and textures created by the game developers and they are the same for everyone. It doesn't matter what GPU you use, or from which manufacturer, our visual experiences of a game from today will be the same.

If a subsequent generation of DLSS starts using a generative AI post-processing filter, then that will no longer be the case. Nvidia would obviously stick with its proprietary stance, using a specific model and training data for its version, and that would end up generating different visual results to a theoretical open source version from AMD or Intel operating on differently trained models.

Hell, it being generative AI, even if you were using the exact same GPU there's a good chance you'd have a different visual experience compared with someone else.

And maybe that would be deliberately so.

Nvidia already has its Freestyle filters you can use to change how your games look depending on your personal tastes. Take that a little further down the AI rabbit hole and we could be in a situation where you're guiding that generative AI filter to really tailor your experience. And I'm kinda excited about what that would mean for playing your old games with such technology, too.

GTA IV with ultra-realistic graphics Gen-3 video to video Runway ML Artificial intelligence - YouTube

Because, theoretically (in my own made-up future, anyway), you could layer the AI post-processing over an older game and have yourself a pretty spectacular remaster. Or, more interestingly, have a completely different set of visuals. Wanna replay GTA V, but have a hankering for more of a Sleeping Dogs vibe? Tell the filter that you want it to be set in Hong Kong instead of Los Santos. Want everybody to have the face of Thomas the Tank Engine? Sure thing. Why not replace all the bullets with paintballs while you're at it?

It'll put modders out of business, and it's not going to go down well with game artists, either. Poor schmucks. But it'll put us in control of at least one aspect of a gameworld if we want to.

This is a future which doesn't feel all that far away when I'm looking at photorealistic versions of Professor Kleiner or Roman Bellic layered over their own character models. Though there are obviously some pretty hefty hurdles to leap before we get there. Memory will be a biggie. The models, in order to achieve the sort of latencies we're talking about here, would likely need to be local, stored on your own machine. And that means you're going to need a ton of storage space and memory to be able to run them fast enough. Then there's the actual AI processing itself. And as we get closer to such a reality there will probably be some even more janky, uncanny valley moments to suffer through, too.

But it could well be worth it. If only to replay Half-Life all over again.
